Migrate Native RDBMS Data Sources to OCF Connectors¶

Alation Cloud Service Applies to Alation Cloud Service instances of Alation

Customer Managed Applies to customer-managed instances of Alation

Use the instructions on this page to migrate your native data sources to Open Connector Framework (OCF) Connector. A native data source is an RDBMS source that is cataloged in Alation using one of the native (built-in) connectors. On the latest version of Alation, all native connectors have the corresponding OCF connector alternative. You need to check connector availability for older versions. Find more information in the Version 2023.1 for your Alation release.

- Native data sources can be migrated to the OCF connector using one of the following methods:

Bulk Data Source Migration (recommended)

What Is Migrated¶

The following information will be migrated from the native (built-in) data source to the OCF data source:

Source ID—The data source ID will remain the same as before the migration.

Data source settings—Connection, metadata extraction (MDE), profiling, query log ingestion (QLI), and Compose configurations.

Physical metadata of the source such as schemas, tables, attributes, projects, and other previously extracted metadata. If needed, a Data Source

Admin can re-run extraction after migrating to the OCF connector.

Logical metadata such as values of the custom fields, data quality flags, @-mentions in the custom fields, @-mentions in articles, the title and description, stewards, tags, top users, and fields shared through catalog sets.

Saved searches.

Sampling and profiling data.

Catalog information generated from QLI (Top Users, Popularity, and JOIN and filter information).

Lineage information.

Querystatements ingested from Compose.

Prerequisites¶

Before performing the migration, ensure you fulfill these prerequisites:

We do not recommend migrating your data sources before you upgrade Alation to 2023.1.5 or newer.

Install the required OCF connector on your Alation instance. See Manage Connectors (on-premise) or Manage Connectors (Alation Cloud Services) for directions. Make sure you are installing the latest available version of the connector.

We recommend saving the information in all sections of the data source settings (Access, General Settings, Metadata Extraction, Data Sampling, Per-Object Parameters, and Query Log Ingestion) or taking screenshots of the corresponding tabs. You can use this information after the migration to validate that all the values were migrated correctly.

Make sure that the MDE, Profiling, and QLI jobs are not running while you perform the migration.

SSL certificates for data sources that were previously uploaded to Alation are not migrated if you migrate your data sources in bulk. You will need to re-upload them after the migration. Make sure you have the SSL certificate files for your data sources at the ready. However, if you migrate a single data source, the SSL certificate should be migrated and re-uploading will not be required.

Note

User certificates for Google BigQuery data sources will not be migrated. Make sure you have the user certificate at the ready.

Limitations¶

Data sources with Kerberos authentication cannot be migrated using the bulk migration method. Even if you include such a data source into the migration payload, it will be skipped.

SSL certificates will not be migrated during bulk migration. Users need to upload the SSL certificate manually post migration.

User certificates for Google BigQuery data sources will not be migrated. Users need to set up the OAuth configuration manually after the migration.

Sampling in Query Log Ingestion is not supported in OCF. If Sampling in QLI is enabled in the native source, the QLI will fail after migration.

Sampling fails after migration to OCF. To workaround this issue, run the Metadata Extraction before Sampling.

- For Alation version 2023.3.4 and prior:

Data sources with Kerberos authentication cannot be migrated. You must manually enable the Kerberos authentication in OCF.

Synonym extraction scope is not migrated. It is enabled by default in the OCF.

System schema extraction scope (default schema objects) is not migrated.

Bulk Data Source Migration¶

Supported Data Sources¶

The data sources listed in the table below are supported for bulk migration to OCF connectors:

Native Data Source Name |

Equivalent OCF Connector |

|---|---|

Azure SQL Data Warehouse |

Synapse OCF Connector |

Google BigQuery |

Google BigQuery OCF Connector |

DB2 |

DB2 OCF Connector |

Databricks |

Databricks OCF Connector |

Greenplum |

Greenplum OCF Connector |

Hive2 |

Hive OCF Connector |

Impala |

Impala OCF Connector |

MemSQL |

MemSQL OCF Connector |

MySQL |

MySQL OCF Connector |

Oracle |

Oracle OCF Connector |

PostgreSQL |

PostgreSQL OCF Connector |

Redshift |

Redshift OCF Connector |

SAP Hana |

SAP HANA OCF Connector |

SAS |

SAS OCF Connector |

Snowflake |

Snowflake OCF Connector |

SQL Server |

SQL Server OCF Connector |

Sybase IQ |

Sybase IQ OCF Connector |

Sybase ASE |

SybaseASE OCF Connector |

Teradata |

Teradata OCF Connector |

Vertica |

Vertica OCF Connector |

Perform Bulk Data Source Migration¶

The bulk migration method can be used if you want to migrate more than one data source from a native connector to the corresponding OCF connector. Bulk migration is recommended when you have a large number of datasources of the same database type (e.g. MySQL) and would like to migrate these in one go. It is only supported for RDBMS native data sources.

Bulk migration can be performed using the Public API method.

Note

Bulk migration is the recommended method for users to perform migration from native connectors to OCF.

Public API¶

Bulk migration using the public API can be performed on both Alation on-premise and Alation Cloud Service instance by the Alation users with the Server Admin role.

To use this method you need to generate an API token using the steps mentioned below:

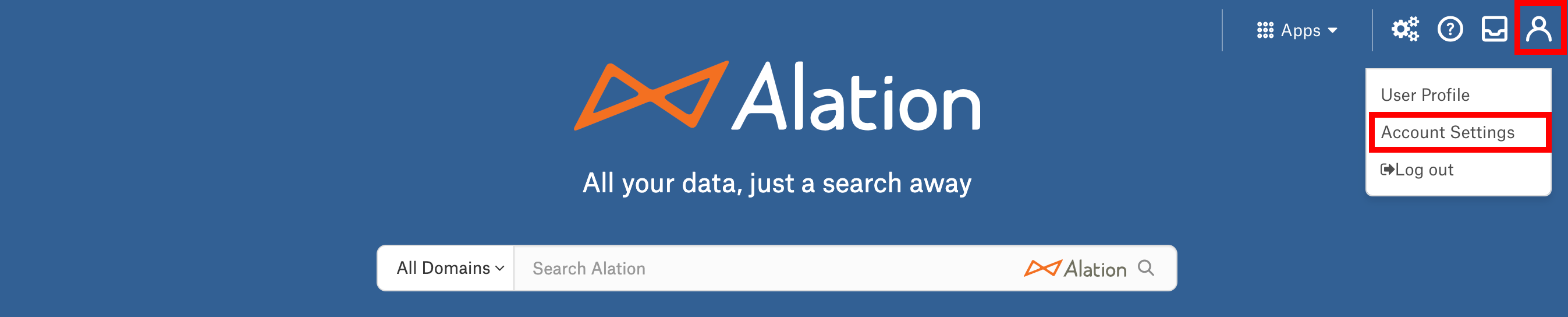

Click the My Account icon on the top right corner and select Account Settings.

Go to the Authentication tab and click Create Refresh Token.

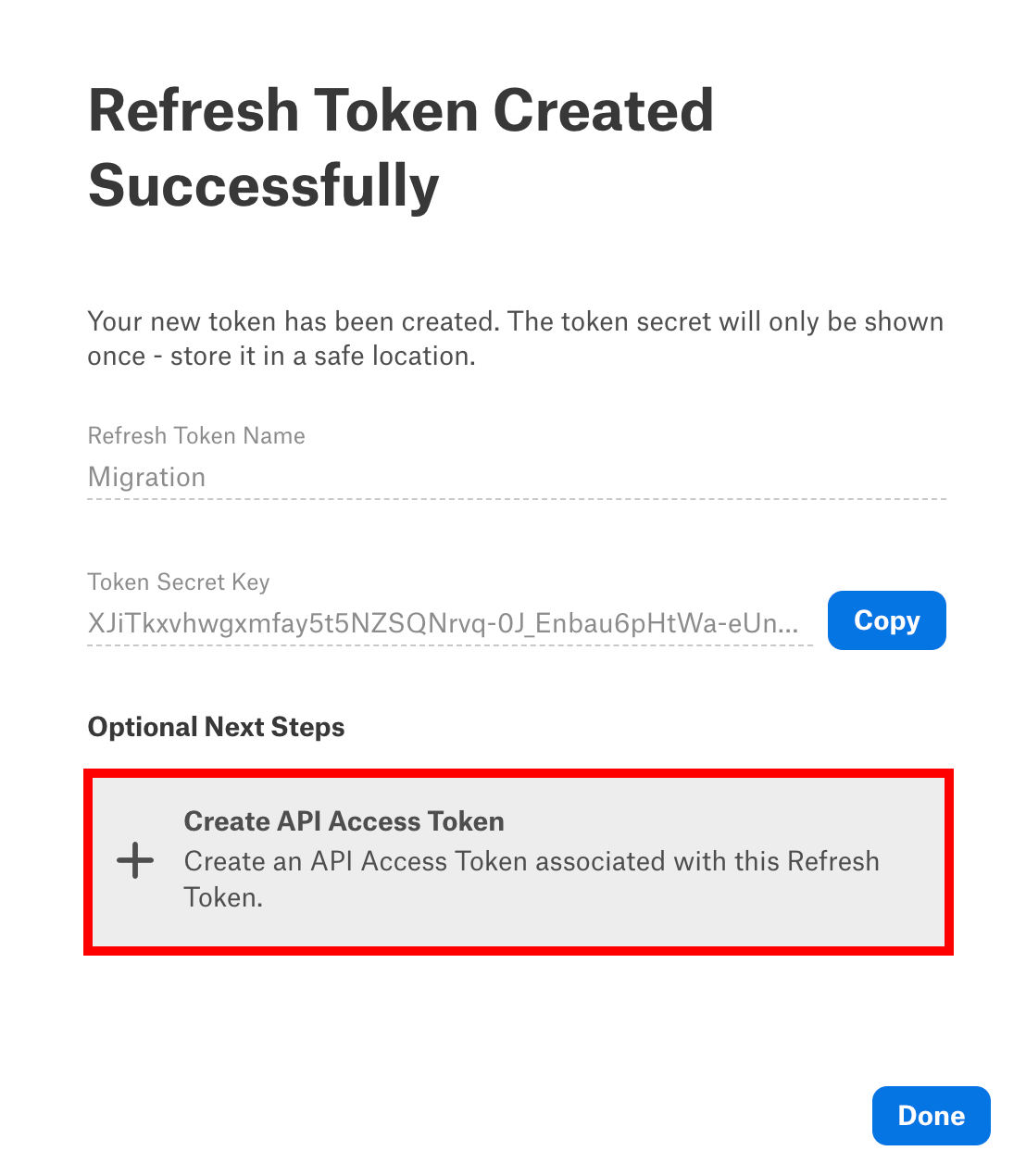

Provide a Refresh Token Name and click Create.

In the dialog that opens, click Create API Access Token.

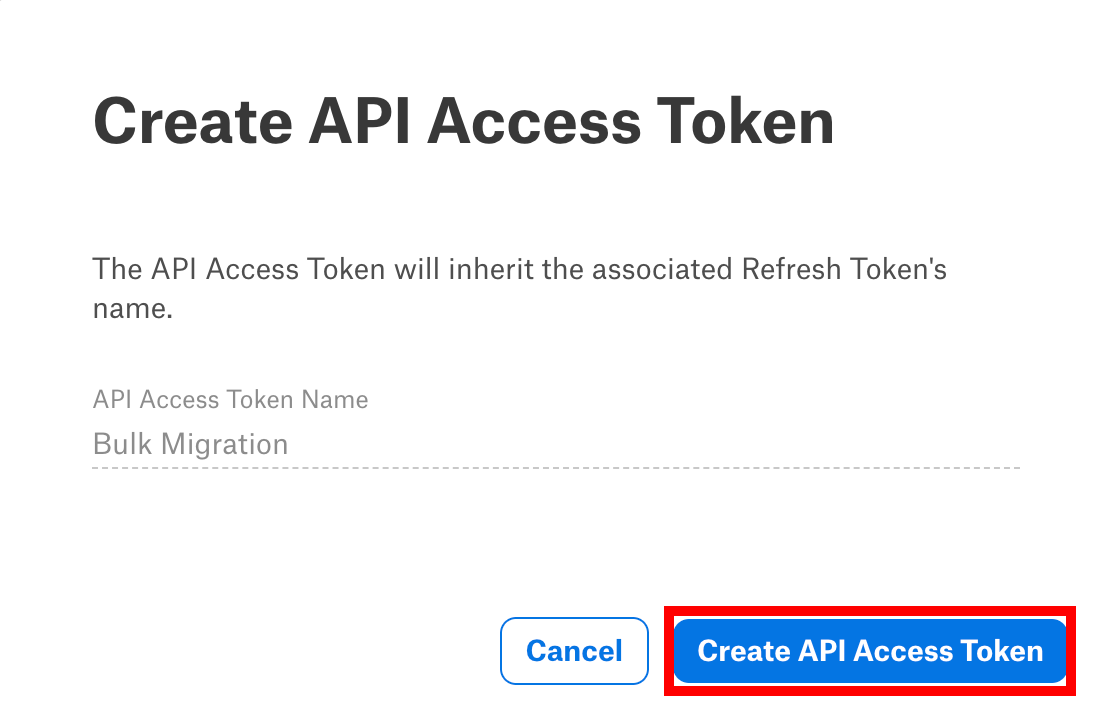

In the next dialog, click Create API Access Token.

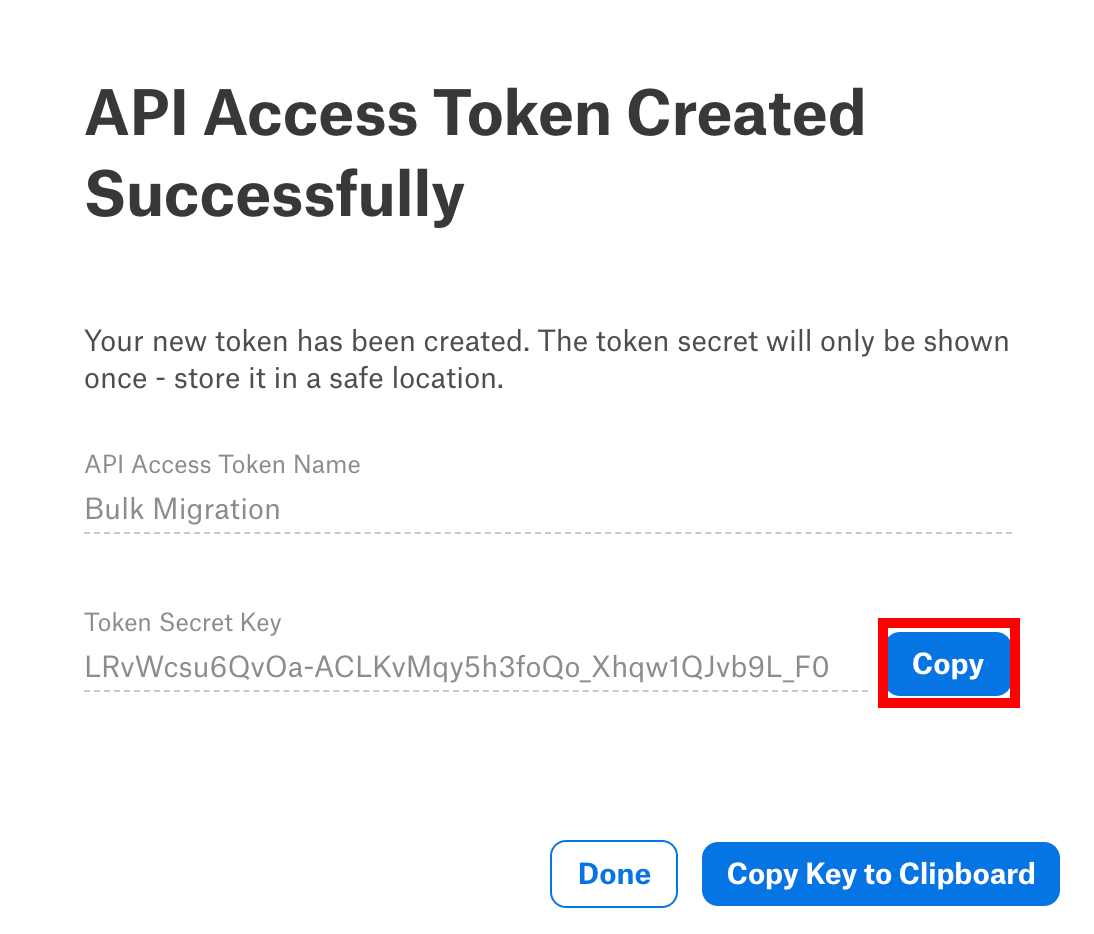

Click Copy to copy the Token Secret Key and save the key on your local machine.

Next, you can use the public API to migrate your data sources. Refer to Bulk Migrate Data Sources to OCF on Developer Portal for details.

Troubleshooting¶

The script will generate a log file at /opt/alation/site/logs location with name native_to_ocf_migration.log. This log will have all the logs for script execution.

In the script output, you can see the summary at the end where you can find successful, failed, and skipped data sources during migration.

To troubleshoot migration failure, please check the ypireti.log file, this file is at the /opt/alation/site/logs.

Find examples of errors in the table below:

Error |

Cause |

Solution |

|---|---|---|

Unable to proceed further due to an invalid database type. |

User provided a wrong database type or an unsupported database type during the script execution. |

Make sure that the database type that you migrate is supported for migration. Refer to the list of supported data sources. |

There are no native data sources of type {DB_TYPE} found or existing. |

The {DB_TYPE} for native data source provided by the user is not available for the given database in the instance. |

Make sure that the database type that you migrate is supported for migration. Refer to the list of supported data sources. |

There are no valid OCF connectors installed for database type {DB_TYPE}. |

If the equivalent OCF connector is not installed for a given database type. |

Install the OCF connector from Admin Settings > Manage Connectors. |

If unable to proceed further due to an invalid connector id. |

User provided an invalid connector id. |

Check the list of installed OCF connectors from the Alation UI dashboard and provide the correct connector id. |

There are no native data sources of type {DB_TYPE} is found with BASIC(Username/Password) auth type, not proceeding further. |

If there are no native data source with basic(username and password) authentication type. |

No fix required as there are no data sources with basic authentication is available. You might have data sources for this type but those may have different authentication like Kerberos which is not supported for bulk migration. |

Unable to proceed further due to invalid input. |

The skip connector ids provided by the user are not in the expected format. |

You need to provide the connector ids as a list separated by commas for datasources_to_skip key in the request body. Example: 1,2,3 |

Unable to proceed further because no confirmation is provided. |

User did not provide the confirmation. |

Not applicable |

Validation¶

After the migration, perform the following validations:

Single Data Source Migration¶

Supported Data Sources¶

The data sources listed in the following table are supported for single data source migration of the data source to OCF connector:

Native Data Source Name |

Equivalent OCF Connector |

|---|---|

Azure SQL Data Warehouse |

Synapse OCF Connector |

Google BigQuery |

Google BigQuery OCF Connector |

DB2 |

DB2 OCF Connector |

Databricks |

Databricks OCF Connector |

Greenplum |

Greenplum OCF Connector |

Hive2 |

Hive OCF Connector |

Impala |

Impala OCF Connector |

MemSQL |

MemSQL OCF Connector |

MySQL |

MySQL OCF Connector |

Oracle |

Oracle OCF Connector |

PostgreSQL |

PostgreSQL OCF Connector |

Redshift |

Redshift OCF Connector |

SAP HANA |

SAP HANA OCF Connector |

SAS |

SAS OCF Connector |

Snowflake |

Snowflake OCF Connector |

SQL Server |

SQL Server OCF Connector |

Sybase IQ |

Sybase IQ OCF Connector |

Sybase ASE |

SybaseASE OCF Connector |

Teradata |

Teradata OCF Connector |

Vertica |

Vertica OCF Connector |

Perform Single Data Source Migration¶

You can use the single data source migration method if you want to migrate only one data source at a time. Single data source migration can be performed for on-premise installations of Alation or Alation Cloud Service instances, you can migrate using the user interface.

Note

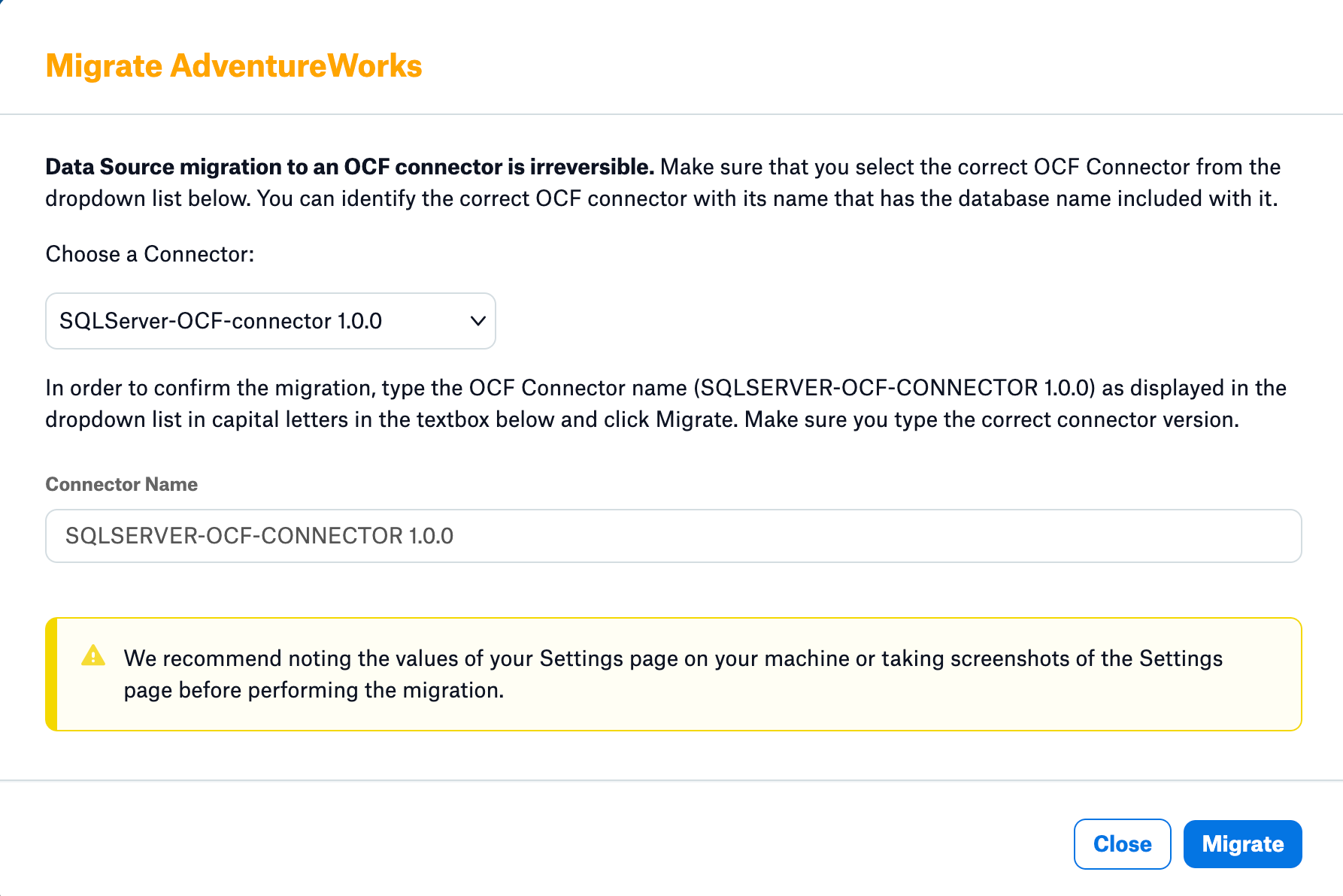

Migration to an OCF connector is irreversible.We recommend saving the settings of your source or taking screenshots of the Settings page before performing the migration.

Migrate a Source Using the Alation UI¶

To use this method, a Server Admin must first enable it:

As a Server Admin, click the gear icon in the top right corner to open the Admin Settings page.

Click Feature Configuration.

Enable the Enable Native Connector Migration to OCF Connector toggle.

Click Save Changes.

You must be a Server Admin to perform the migration. To migrate your data source to OCF using the Alation interface:

Note

Migration to an OCF connector is irreversible.We recommend saving the settings of your source or taking screenshots of the Settings page before performing the migration.

Select the RDBMS data source or File System source that you want to migrate.

Go to the General Settings page of the RDBMS or File System source.

Important

This is a good time to take screenshots or otherwise take note of the current settings for your data source. Some settings will not be migrated. You will need to manually reconfigure any settings that aren’t migrated.

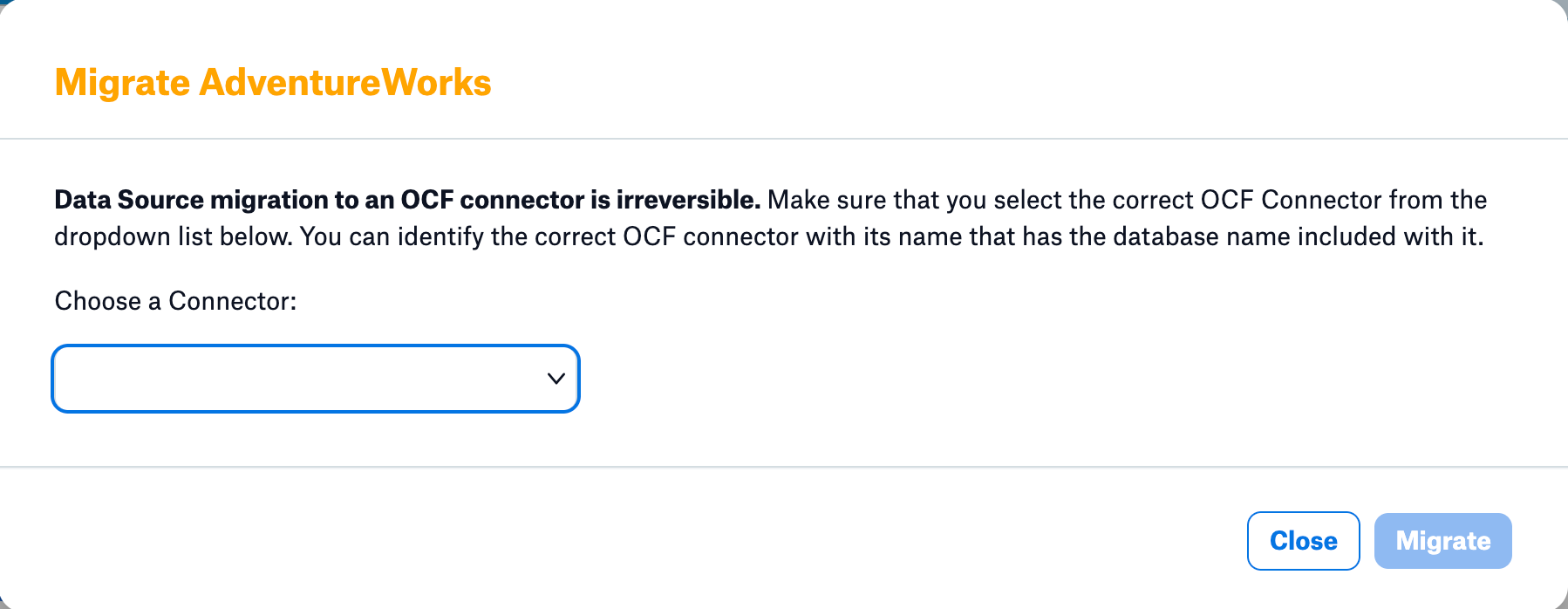

Scroll down to the bottom of the page and Click Migrate. The migration dialog appears.

Using the Choose a Connector drop-down menu, select the OCF connector you’re migrating to. The connector name indicates what type of database it supports. If the needed OCF connector is unavailable in the drop-down list, you must first install the connector. See Manage Connectors for directions.

To verify that you’ve chosen the right connector, type in the entire connector name and version in capital letters in the Connector Name field. Type the exact name as shown in the Choose a Connector drop-down menu. This will enable the Migrate button.

Click Migrate.

The data source will be migrated to the chosen OCF connector, and the OCF data source settings page will open. Check the notes you made of your previous settings and reconfigure any settings that were not migrated.

Migration Log Location¶

For on-premise installations of Alation, the migration logs are available in the ocf.log file at /opt/alation/site/logs location within the alation shell.

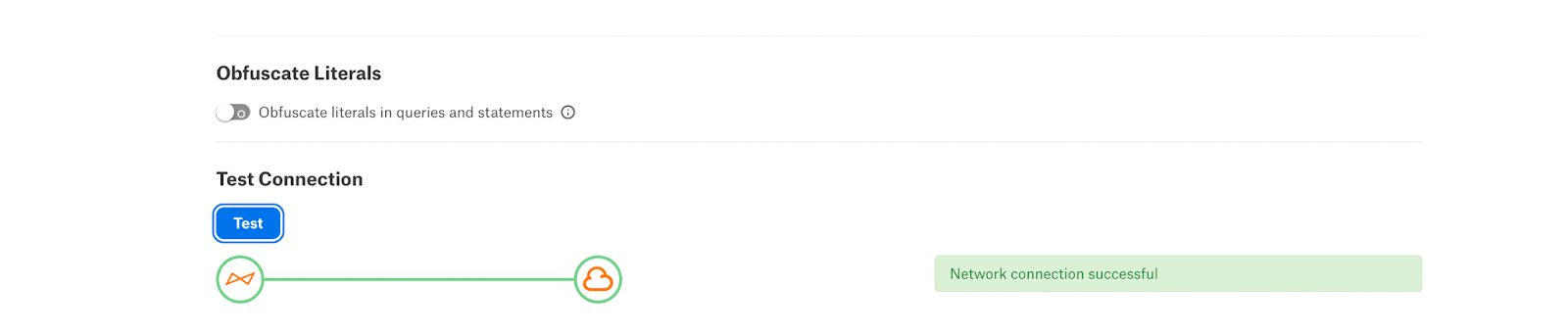

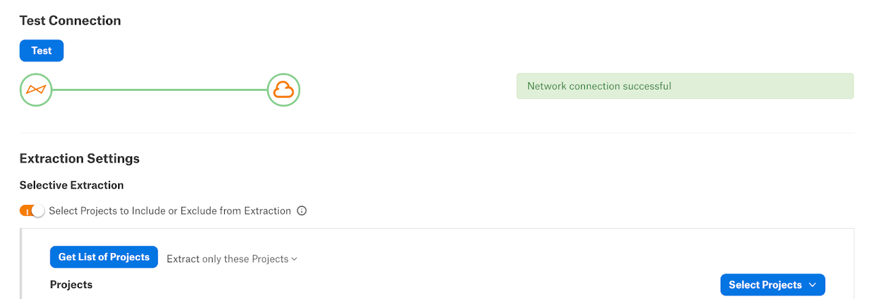

Validate Connection after Migration¶

After the migration, validate the connection between Alation and the database. To test the connection:

In the Alation user interface, go to the Settings page of the source that was migrated.

Scroll down to the Test Connection section.

Under Test Connection, click Test. The test should return the message Network connection successful.

If the connection fails, check and update the connection settings and try again. You can also check the connector logs for any specific connection errors. For RDBMS data sources, to view the connector logs, click the link on top of the Settings page to go to the corresponding connector page in Admin Settings > Manage Connectors.

Troubleshooting¶

For on-premise instances, the migration logs are available in the ypireti.log, ypireti ocf.log file at /opt/alation/site/logs location within the alation shell. For migration failure, check logs from this file and reach out to the Alation Support for further assistance.

Post Migration Activity¶

Upload the SSL certificates in the General Settings page of your OCF data source if the native data source is authenticated with SSL.

For Google BigQuery data sources, if you use the User Account Certificate, you need to set up the OAuth configuration manually post-migration. See Create an OAuth Configuration for Extraction and Compose for more details.

For SQL Server data sources, if it is connected using Basic or NTLM authentication, append

encrypt=falseto the JDBC URI of the OCF data source.

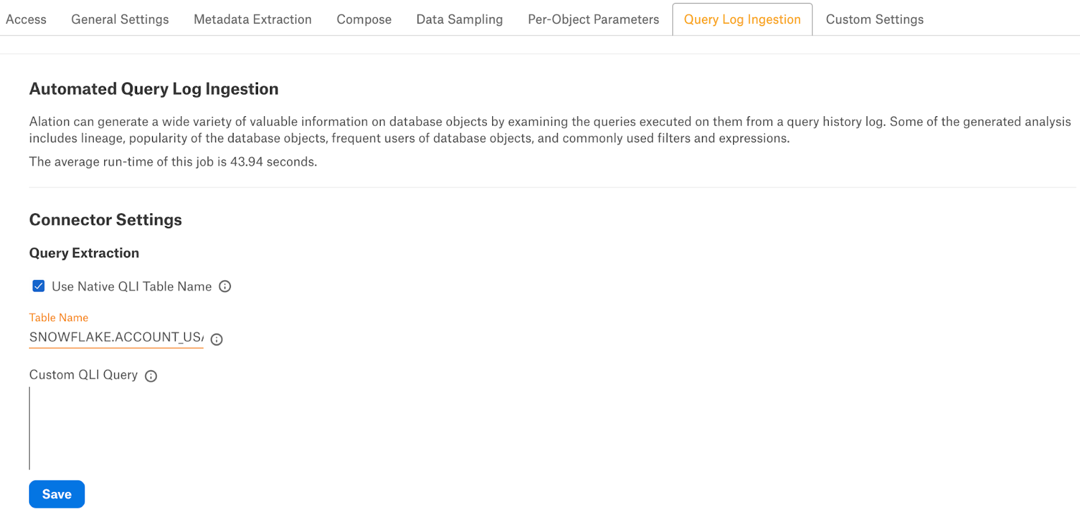

Use Native QLI Table Name¶

Applies from version 2023.1.4

For some data source types that were migrated from their respective native connectors to OCF, you can reuse the existing query log ingestion (QLI) configuration. If the OCF connector that a data source was migrated to supports this capability, you will see the checkbox Use Native QLI Table Name on the Query Log Ingestion tab of the data source Settings page.

The Use Native QLI Table Name functionality is available with these OCF connectors:

Native Data Source

OCF Connector Name

Available from Connector Version

Greenplum

Greenplum OCF connector

1.0.5.4329

MySQL

MySQL OCF connector

1.3.0.3139

Oracle

Oracle OCF connector

1.4.0.3146

PostgreSQL

PostgreSQL OCF connector

1.1.2.3886

SAP ASE (Sybase ASE)

Sybase ASE OCF connector

1.1.1.3246

Snowflake

Snowflake OCF connector

1.1.2.4595

SQL Server

SQL Server OCF connector

1.2.0.3185

Vertica

Vertica OCF connector

1.2.1.3246

After you migrate your native data source, you’ll see that the checkbox Use Native QLI Table Name checkbox is already auto-selected. You should also see the name of the existing QLI table was transferred into the Table Name field.

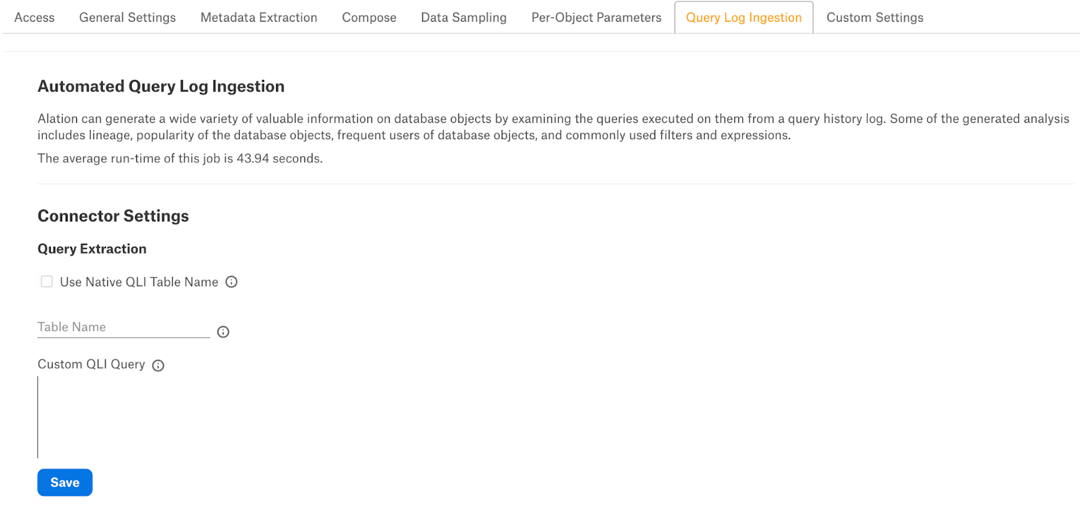

Note that for a completely new OCF data source, this is different. The checkbox Use Native QLI Table Name and the Table Name field will be present but clear.

Note

For a newly added OCF data source, you can still use this feature if you previously set up QLI for the same database and the corresponding native connector.

For a number of data sources, the QLI configuration done on the native connector automatically applies after the data source migration. For such data sources, the QLI table or view name will be migrated into the Table Name field, but the checkbox Use Native QLI Table Name checkbox will not be present. These data sources are:

Native Data Source

OCF Connector Name

Amazon Redshift

Redshift OCF connector

SAP HANA

SAP HANA OCF connector

Teradata

Teradata OCF connector

You can test the QLI configuration after migrating the data source by running QLI for the next available date range.