Configure Connection to Data Source¶

Applies to: Alation Cloud Service instances and customer-managed instances of Alation

After you install the Elasticsearch OCF connector, you must configure the connection to the Elasticsearch data source.

Configuring the Elasticsearch data source connection settings involves the following steps:

Provide Access¶

On the Access tab of the Settings page of your Elasticsearch data source, set the data source visibility using these options:

Public Data Source — The data source is visible to all users of the catalog.

Private Data Source — The data source is visible to the users allowed access to the data source by Data Source Admins.

You can add new Data Source Admin users in the Data Source Admins section.

Connect to Data Source¶

To connect to the data source, you must perform these steps:

Application Settings¶

Specify Application Settings if applicable. Save the changes after providing the information by clicking Save.


| Parameter | Description |
|---|---|
| BI Connection Info | This parameter is used to generate lineage between the current data source and another source in the catalog, for example a BI source that retrieves data from the underlying database. The parameter accepts host and port information of the corresponding BI data source connection. Use the following format: You can provide multiple values as a comma-separated list: Find more details in BI Connection Info. |
| Disable Automatic Lineage Generation | Select this checkbox to disable automatic lineage generation from QLI, MDE, and Compose queries. By default, automatic lineage generation is enabled. |

Connector Settings¶

Datasource Connection¶

Not applicable.

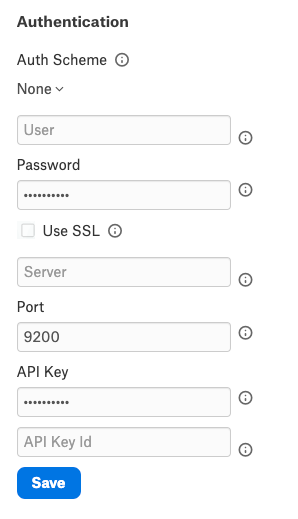

Authentication¶

Specify Authentication Settings. Save the changes after providing the information by clicking Save.

| Parameter | Description |
|---|---|
| Auth Scheme | Select the auth scheme used for authentication with Elasticsearch from the dropdown. See Appendix - Authentication Schemes for the configuration fields required for each authentication scheme. |
| User | Specify the username used to authenticate to Elasticsearch. |
| Password | Specify the password used to authenticate to Elasticsearch. |
| Use SSL | Select this checkbox to use SSL/TLS authentication. |
| Server | The host name or IP address of the Elasticsearch REST server. Alternatively, multiple nodes in a single cluster can be specified, though all such nodes must be able to support REST API calls. |
| Port | The port for the Elasticsearch REST server. |
| API Key | Specify the API Key used to authenticate to Elasticsearch. |
| API Key Id | Specify the API Key ID used to authenticate to Elasticsearch. |

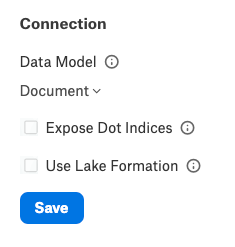
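As a rough illustration of how the User/Password and API Key Id/API Key fields relate to the Elasticsearch REST API, the sketch below builds the `Authorization` header that Elasticsearch accepts for basic and API key authentication, plus a base URL from Server, Port, and Use SSL. The function names are hypothetical, and how the connector itself assembles requests is an assumption; this only mirrors Elasticsearch's REST conventions and can help when checking credentials outside Alation.

```python
import base64


def build_auth_header(user=None, password=None, api_key_id=None, api_key=None):
    """Return an Authorization header dict for the Elasticsearch REST API.

    Hypothetical helper: prefers API key credentials when both parts are given,
    otherwise falls back to basic authentication, otherwise returns no header.
    """
    if api_key_id and api_key:
        # Elasticsearch expects "ApiKey " + base64("<id>:<api_key>").
        token = base64.b64encode(f"{api_key_id}:{api_key}".encode("utf-8"))
        return {"Authorization": "ApiKey " + token.decode("ascii")}
    if user is not None and password is not None:
        # Standard HTTP basic authentication.
        token = base64.b64encode(f"{user}:{password}".encode("utf-8"))
        return {"Authorization": "Basic " + token.decode("ascii")}
    return {}


def build_base_url(server, port, use_ssl):
    """Combine the Server, Port, and Use SSL values into a REST base URL."""
    scheme = "https" if use_ssl else "http"
    return f"{scheme}://{server}:{port}"
```

The resulting header and URL can be passed to any HTTP client to confirm that the credentials work before entering them in the connector settings.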

Connection¶

Specify Connection properties. Save the changes after providing the information by clicking Save.

| Parameter | Description |
|---|---|
| Data Model | Specifies the data model to use when parsing Elasticsearch documents and generating the database metadata. |
| Expose Dot Indices | Select this checkbox to expose the dot indices as tables or views. |
| Use Lake Formation | Select this checkbox to use the AWS Lake Formation service to retrieve temporary credentials, which enforce access policies against the user based on the configured IAM role. The service can be used when authenticating through OKTA, ADFS, AzureAD, and PingFederate while providing a SAML assertion. |

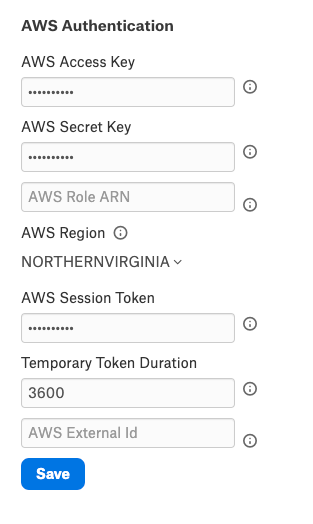

AWS Authentication¶

Specify AWS Authentication properties. Save the changes after providing the information by clicking Save.

| Parameter | Description |
|---|---|
| AWS Access Key | Specify the AWS account access key. This value is accessible from your AWS security credentials page. |
| AWS Secret Key | Specify the AWS account secret key. This value is accessible from your AWS security credentials page. |
| AWS Role ARN | Specify the Amazon Resource Name of the role to use when authenticating. |
| AWS Region | Specify the hosting region for your Amazon Web Services. |
| AWS Session Token | Specify the AWS session token. |
| Temporary Token Duration | Specify the amount of time (in seconds) an AWS temporary token will last. |
| AWS External Id | Specify the unique identifier required when you assume a role in another account. |

Kerberos¶

Not supported.

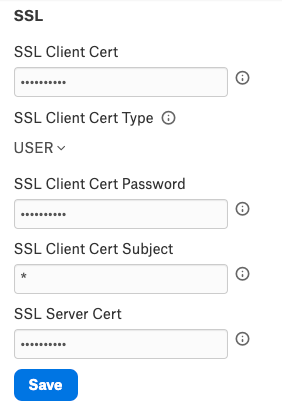

SSL¶

Specify SSL properties. Save the changes after providing the information by clicking Save.

| Parameter | Description |
|---|---|
| SSL Client Cert | Specify the TLS/SSL client certificate store for SSL Client Authentication (2-way SSL). |
| SSL Client Cert Type | Select the type of key store containing the TLS or SSL client certificate from the drop-down. |
| SSL Client Cert Password | Specify the password for the TLS or SSL client certificate. |
| SSL Client Cert Subject | Specify the subject of the TLS or SSL client certificate. |
| SSL Server Cert | Specify the certificate to be accepted from the server when connecting using TLS or SSL. |

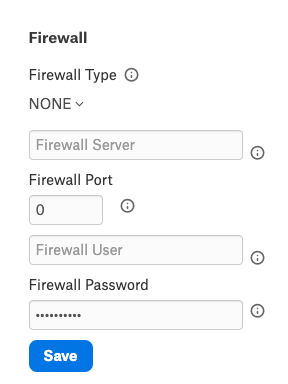

Firewall¶

Specify Firewall properties. Save the changes after providing the information by clicking Save.

| Parameter | Description |
|---|---|
| Firewall Type | Specify the protocol used by a proxy-based firewall. |
| Firewall Server | Specify the hostname, DNS name, or IP address of the proxy-based firewall. |
| Firewall Port | Specify the TCP port of the proxy-based firewall. |
| Firewall User | Specify the user name to authenticate with the proxy-based firewall. |
| Firewall Password | Specify the password to authenticate with the proxy-based firewall. |

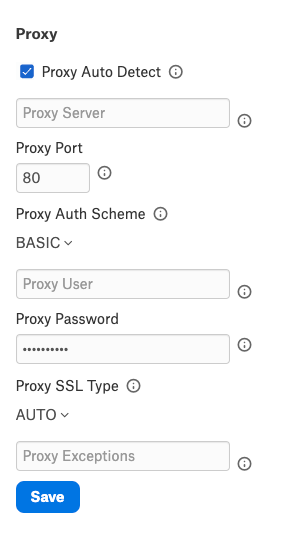

Proxy¶

Specify Proxy properties. Save the changes after providing the information by clicking Save.

| Parameter | Description |
|---|---|
| Proxy Auto Detect | Select the checkbox to use the system proxy settings. This takes precedence over other proxy settings, so do not select this checkbox to use custom proxy settings. |
| Proxy Server | Specify the hostname or IP address of a proxy to route HTTP traffic through. |
| Proxy Port | Specify the TCP port that the Proxy Server is running on. |
| Proxy Auth Scheme | Select the authentication type to authenticate to the Proxy Server from the drop-down: |
| Proxy User | Specify the user name to authenticate to the Proxy Server. |
| Proxy Password | Specify the password of the Proxy User. |
| Proxy SSL Type | Select the SSL type when connecting to the Proxy Server from the drop-down: |
| Proxy Exceptions | Specify the semicolon-separated list of destination hostnames or IPs that are exempt from connecting through the Proxy Server. |

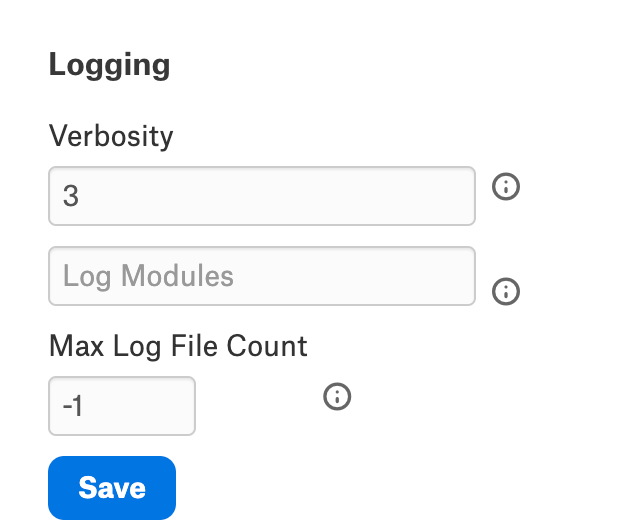

Logging¶

Specify Logging properties. Save the changes after providing the information by clicking Save.

| Parameter | Description |
|---|---|
| Verbosity | Specify the verbosity level, from 1 to 5, that controls the amount of detail included in the log file. |
| Log Modules | Includes the core modules in the log files. Add module names separated by a semicolon. By default, all modules are included. |
| Max Log File Count | Specify the maximum file count for log files. After the limit, the log file is rolled over, and time is appended at the end of the file. The oldest log file is deleted. Maximum Value: 2. Default: -1. A negative or zero value indicates unlimited files. |

View the connector logs in Admin Settings > Server Admin > Manage Connectors > Elasticsearch OCF connector.

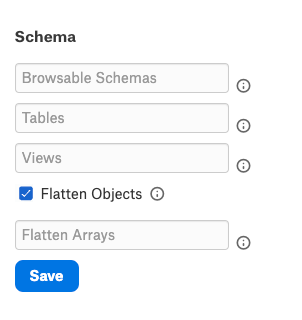

Schema¶

Specify Schema properties. Save the changes after providing the information by clicking Save.

| Parameter | Description |
|---|---|
| Browsable Schemas | Specify the schemas as a subset of the available schemas in a comma-separated list. For example, BrowsableSchemas=SchemaA,SchemaB,SchemaC. |
| Tables | Specify the fully qualified names of the tables as a subset of the available tables in a comma-separated list. Each table must be a valid SQL identifier that might contain special characters escaped using square brackets, double quotes, or backticks. For example, Tables=TableA,[TableB/WithSlash],WithCatalog.WithSchema.`TableC With Space`. |
| Views | Specify the fully qualified names of the views as a subset of the available views in a comma-separated list. Each view must be a valid SQL identifier that might contain special characters escaped using square brackets, double quotes, or backticks. For example, Views=ViewA,[ViewB/WithSlash],WithCatalog.WithSchema.`ViewC With Space`. |
| Flatten Objects | Select the Flatten Objects checkbox to flatten object properties into their own columns. Otherwise, objects nested in arrays are returned as strings of JSON. |
| Flatten Arrays | Set Flatten Arrays to the number of nested array elements you want to return as table columns. By default, nested arrays are returned as strings of JSON. |
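To make the effect of Flatten Objects and Flatten Arrays more concrete, here is a minimal sketch of the kind of transformation those settings describe. It is not the connector's implementation: the function name, the dotted column naming (`address.city`, `tags.0`), and the exact JSON string formatting are assumptions chosen for illustration.

```python
import json


def flatten_document(doc, flatten_objects=True, flatten_arrays=0):
    """Turn one nested document into a flat {column_name: value} mapping.

    Hypothetical illustration only; column names use dotted paths as an assumption.
    """
    columns = {}

    def visit(path, value):
        if isinstance(value, dict) and flatten_objects:
            # Each object property becomes its own column.
            for key, child in value.items():
                visit(f"{path}.{key}", child)
        elif isinstance(value, list) and flatten_arrays > 0:
            # Only the first `flatten_arrays` elements become columns.
            for index, child in enumerate(value[:flatten_arrays]):
                visit(f"{path}.{index}", child)
        elif isinstance(value, (dict, list)):
            # Anything not flattened is kept as a string of JSON.
            columns[path] = json.dumps(value)
        else:
            columns[path] = value

    for key, value in doc.items():
        visit(key, value)
    return columns
```

For example, with `flatten_arrays=2` a three-element `tags` array yields two columns (`tags.0` and `tags.1`), while the default of `0` keeps the whole array as a single JSON string column.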

Miscellaneous¶

Specify Miscellaneous properties. Save the changes after providing the information by clicking Save.

| Parameter | Description |
|---|---|
| Batch Size | Specify the maximum size of each batch operation to submit. When BatchSize is set to a value greater than 0, the batch operation will split the entire batch into separate batches of size BatchSize. The split batches will then be submitted to the server individually. This is useful when the server has limitations on the request size that can be submitted. Setting BatchSize to 0 will submit the entire batch as specified. |
| Client Side Evaluation | Select the Client Side Evaluation checkbox to evaluate nested objects on the client side. |
| Connection LifeTime | The maximum lifetime of a connection in seconds. Once the time has elapsed, the connection object is disposed. The default is 0, indicating no limit to the connection lifetime. |
| Generate Schema Files | Select the user preference for when schemas should be generated and saved. |
| Maximum Results | The maximum number of total results to return from Elasticsearch when using the default Search API. |
| Max Rows | Limits the number of rows returned when no aggregation or GROUP BY is used in the query. This takes precedence over LIMIT clauses. |
| Other | This field is for properties that are used only in specific use cases. |
| Page size | Specify the maximum number of rows to fetch from Elasticsearch per request. The provider batches reads to reduce overhead: instead of fetching a single row from the server every time a query row is read, it reads multiple rows and saves them to the result set. Only the first row read from the result set must wait for the server; later rows are read directly from this buffer. A higher value uses more memory but requires waiting on the server less often. A lower value uses less memory but gives lower throughput. |
| Pagination Mode | Specifies whether to use PIT (point in time) with search_after or scroll to page through query results. |
| PIT Duration | Specifies how long, as a time unit value, to keep the point in time alive when retrieving results via the PIT API. |
| Pool Idle Timeout | The allowed idle time for a connection before it is closed. |
| Pool Max Size | The maximum number of connections in the pool. The default is 100. |
| Pool Min Size | The minimum number of connections in the pool. The default is 1. |
| Pool Wait Time | The maximum number of seconds to wait for an available connection. The default value is 60 seconds. |
| Pseudo Columns | This property indicates whether or not to include pseudo columns as columns in the table. |
| Query Passthrough | Select this checkbox to pass exact queries to Elasticsearch. |
| Read only | Select this checkbox to enforce read-only access to Elasticsearch from the provider. |
| Row Scan Depth | Specify the maximum number of rows to scan when determining the columns and their data types. Setting a high value may decrease performance. Setting a low value may prevent the data type from being determined properly. |
| Scroll Duration | Specifies how long, as a time unit value, to keep the search context alive when retrieving results via the Scroll API. |
| Timeout | Specify the value in seconds until the timeout error is thrown, canceling the operation. |
| Use Connection Pooling | Select this checkbox to enable connection pooling. |
| Use Fully Qualified Nested Table Name | Select this checkbox to set the generated table name as the complete source path when flattening nested documents using the Relational Data Model. |
| User Defined Views | Specify the file path pointing to the JSON configuration file containing your custom views. |
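The Batch Size behavior described in the table can be pictured with a short sketch. This is an illustration under stated assumptions, not the connector's code: `split_batch` is a hypothetical helper that only shows how a value greater than 0 divides one batch into several, and how 0 leaves it whole.

```python
def split_batch(rows, batch_size):
    """Split `rows` into sub-batches the way the Batch Size description implies.

    Hypothetical helper: batch_size > 0 yields chunks of at most that many rows;
    batch_size == 0 yields the entire batch as a single chunk.
    """
    if batch_size < 0:
        raise ValueError("batch_size must be 0 or greater")
    rows = list(rows)
    if batch_size == 0:
        # Submit the entire batch as specified.
        return [rows]
    # Each slice would be submitted to the server as an individual request.
    return [rows[i:i + batch_size] for i in range(0, len(rows), batch_size)]
```

With five rows and a batch size of 2, this produces three sub-batches, the last of which holds the single remaining row.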

Obfuscate Literals¶

Obfuscate Literals — Enable this toggle to hide the details of the queries in the catalog page that are ingested via QLI or executed in Compose. Disabled by default.

Test Connection¶

Under Test Connection, click Test to validate network connectivity.

Note

You can only test connectivity after providing the authentication information.

Metadata Extraction¶

This connector supports metadata extraction (MDE) based on default queries built in the connector code but does not support custom query-based MDE. You can configure metadata extraction on the Metadata Extraction tab of the settings page.

For more information about the available configuration options, see Configure Metadata Extraction for OCF Data Sources.

Compose¶

For details about configuring the Compose tab of the Settings page, refer to Configure Compose for OCF Data Sources.

Sampling and Profiling¶

Sampling and profiling are supported. For details, see Configure Sampling and Profiling for OCF Data Sources.

Query Log Ingestion¶

Not supported.

Troubleshooting¶

Refer to Troubleshooting for information about logs.